I am creating a skill that forwards the remainder of my utterance to another computer over a UDP connection. A “daemon” on that computer will run adapt-parser to parse the remainder. The Mycroft skill parses the original command, “ask the computer to…”, and then passes on the rest.

Not sure whether this is helpful. Good luck. If I ever get some time I will be playing with the Magic Mirror project.

@pcwii Thanks for the idea. I’m currently trying to capture every utterance, including when the wake word is detected. I’m just not sure if that can be done in a skill. The UDP idea could be really useful with multiple devices, I think. In my example, multiple mirrors. I could say “show weather on the entryway mirror”, for example, and broadcast the message to all mirrors, with each one programmed to listen for its own notification. Cool idea.
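The broadcast-and-filter idea could look roughly like the sketch below. It is only an illustration: the JSON packet layout, the `target` and `text` field names, the function names and port 50000 are all assumptions, not something from the skill or from Mycroft.

```python
import json
import socket


def encode_packet(target, text):
    """Build a UDP payload naming the device it is meant for (hypothetical format)."""
    return json.dumps({"target": target, "text": text}).encode("utf-8")


def decode_for(device_name, data):
    """Return the text if the packet addresses this device (or "all"), else None."""
    packet = json.loads(data.decode("utf-8"))
    if packet.get("target") in (device_name, "all"):
        return packet.get("text")
    return None


def broadcast(target, text, port=50000, address="255.255.255.255"):
    """Send one packet to every host on the local network segment."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # Broadcasting must be enabled explicitly on the socket.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(encode_packet(target, text), (address, port))
    finally:
        sock.close()


def listen_once(device_name, port=50000, timeout=None):
    """Wait for one packet and return its text only if it is for this device."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sock.bind(("", port))
        sock.settimeout(timeout)
        data, _sender = sock.recvfrom(4096)
        return decode_for(device_name, data)
    finally:
        sock.close()
```

Each mirror would run something like `listen_once("entryway")` in a loop, and the skill would call `broadcast("entryway", "show weather")`. Mirrors with a different name receive the packet but ignore it.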

How would you see UDP working to display “Listening” for example, every time Mycroft hears the wake word? That’s my current dilemma.

The only way I can see this working is to modify the wake word listener code to send a UDP packet once the wake word is triggered, maybe somewhere around the point where the listening sound is played. I’m not sure where this is in the code, but if we can find a clean way to enable/disable it in the Mycroft config, we may be able to submit a pull request for it. I actually feel there is some value in creating a process to “echo” the STT result to other devices so that it can be processed there. This would permit a workable communications channel to/from other “Mycroft compatible” devices. It is fairly easy to send Mycroft commands remotely using websockets (https://github.com/pcwii/testing_stuff/blob/master/send_to_mycroft.py), but I don’t think it is as easy to intercept the commands it is processing.
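For readers who have not sent a command to Mycroft over websockets before, here is a minimal sketch of the idea. It is not a copy of the linked script. It assumes the messagebus listens at its usual default, `ws://localhost:8181/core`, and that the third-party `websocket-client` package is installed; the function names are made up for this example.

```python
import json


def build_utterance_message(text):
    """Serialize a text command as a messagebus message (type, data, context)."""
    return json.dumps({
        "type": "recognizer_loop:utterance",
        "data": {"utterances": [text]},
        "context": {},
    })


def send_to_mycroft(text, uri="ws://localhost:8181/core"):
    """Open a websocket to the messagebus, send one utterance, and disconnect."""
    # Imported lazily so the builder above works without the dependency.
    from websocket import create_connection  # pip install websocket-client

    ws = create_connection(uri)
    try:
        ws.send(build_utterance_message(text))
    finally:
        ws.close()
```

Calling `send_to_mycroft("hide clock")` from another machine (with `uri` pointing at the Mycroft device) should make Mycroft handle the text as if it had been spoken.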

You should be able to move those things into your skill by listening for the wake words, STT result and the speak messages on the bus.

This is done using self.add_event(MESSAGE_TYPE, self.handler_method) where MESSAGE_TYPE is a string and self.handler_method is a method you create for handling the message.

The message types for the above cases (if I’ve understood them correctly) are:

Wakeword detected: “recognizer_loop:record_begin”

STT result: “recognizer_loop:utterance”

Mycroft speaks: “speak”

It would look something like this in your skill:

[...]

    # Requires `import requests` at the top of the skill file.

    def initialize(self):
        # Subscribe to the wake word, STT result and speak messages on the bus
        self.add_event('recognizer_loop:record_begin', self.handle_listen)
        self.add_event('recognizer_loop:utterance', self.handle_utterance)
        self.add_event('speak', self.handle_speak)

    def handle_listen(self, message):
        voiceurl = 'http://192.168.3.126:8080/kalliope'  # <----- Here you can use self.settings['ip']
        voice_payload = {"notification": "KALLIOPE", "payload": "Listening"}
        r = requests.post(url=voiceurl, data=voice_payload)

    def handle_utterance(self, message):
        # 'utterances' is a list of STT results; join it into plain text
        utterance = ', '.join(message.data.get('utterances', []))
        voiceurl = 'http://192.168.3.126:8080/kalliope'  # <----- again with self.settings
        voice_payload = {"notification": "KALLIOPE", "payload": utterance}
        r = requests.post(url=voiceurl, data=voice_payload)

    def handle_speak(self, message):
        # What Mycroft says is carried in the singular 'utterance' field
        utterance = message.data.get('utterance')
        voiceurl = 'http://192.168.3.126:8080/kalliope'
        voice_payload = {"notification": "KALLIOPE", "payload": utterance}
        r = requests.post(url=voiceurl, data=voice_payload)

The speed will still be about the same as previously though…
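The inline comments in the handlers suggest reading the mirror’s address from the skill settings instead of hard-coding it. A small helper along these lines could do that. The helper name is hypothetical, the `'ip'` key is the one mentioned in the comment, and the fallback address, port and path are simply the values from the snippet.

```python
def mirror_url(settings, default_ip="192.168.3.126", port=8080):
    """Build the kalliope endpoint URL from a dict-like settings object."""
    # Fall back to the hard-coded address when no 'ip' has been configured.
    ip = settings.get("ip") or default_ip
    return "http://{}:{}/kalliope".format(ip, port)
```

Inside the skill this would be called as `mirror_url(self.settings)`, once in `initialize` or at the start of each handler.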

@forslund You are the MAN!!! Dude, that is exactly what I was hoping for!! I’m having a few issues with the latest update for MagicMirror (my current install is broken - badly!). Once I revert back to a previous image I will give this a try.

Thanks Åke, you really are the MAN!

@forslund thanks to your suggestion here is the code that ended up working to display both what Mycroft hears and what Mycroft says, along with a wake word “listening” indicator.

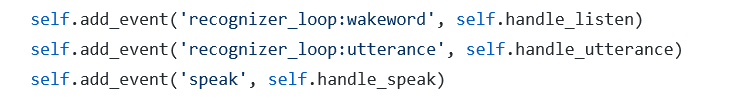

    def initialize(self):
        self.add_event('recognizer_loop:wakeword', self.handle_listen)
        self.add_event('recognizer_loop:utterance', self.handle_utterance)
        self.add_event('speak', self.handle_speak)

    def handle_listen(self, message):
        # Show the "Listening" indicator as soon as the wake word is heard
        voice_payload = {"notification": "KALLIOPE", "payload": "Listening"}
        r = requests.post(url=self.voiceurl, data=voice_payload)

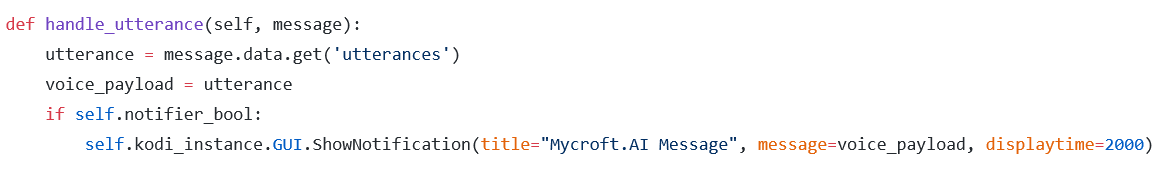

    def handle_utterance(self, message):
        # What the user said (note the plural key 'utterances')
        utterance = message.data.get('utterances')
        voice_payload = {"notification": "KALLIOPE", "payload": utterance}
        r = requests.post(url=self.voiceurl, data=voice_payload)

    def handle_speak(self, message):
        # What Mycroft says (singular key 'utterance')
        speak = message.data.get('utterance')
        voice_payload = {"notification": "KALLIOPE", "payload": speak}
        r = requests.post(url=self.voiceurl, data=voice_payload)

The only issue I am having is that the user utterance gets displayed on the mirror after the text of what Mycroft says. I am not sure if there is a way to send the user utterance to the mirror before Mycroft’s speak utterance so that it makes more sense, or if there is an earlier messagebus event during which I could capture the user utterance. When I get time, I will try doing another short video to demonstrate what the skill does now. The good news is that it’s not a core hack anymore: the requests.post happens from the skill itself, although the text is still delayed compared with how fast Mycroft reacts to the utterance.

Thanks again for your guidance!

@dmwilsonkc,

Congratulations on the amazing progress on your MagicMirror skill. I have a question about capturing the utterances. I have used your code snippet above to send notifications to Kodi in my kodi-skill, and I have noticed that I receive the “Listening!” message and Mycroft’s response, but I never see my own utterances. Are you having the same issue, or do your utterances get returned in the event?

thanks,

@pcwii Thanks. So, I am sort of having the same problem. I haven’t quite figured out why, but my utterances are being displayed after the Mycroft utterances in most cases. The only time my utterances are displayed before Mycroft’s are when Mycroft has to “get information” to answer my request. So for example, when I say “What’s the weather for tomorrow?” and Mycroft has to go to the internet to get the answer, my utterance appears first. But if the response is either hard coded or in a dialog file, Mycroft’s utterance appears first, then my utterance follows. Also, the “action” happens before anything. So for example, if I say “Hey Mycroft” the chirp sounds to indicate the listening state, I respond “hide clock”, the “listening” notification then appears, the MagicMirror clock gets hidden, then Mycroft responds with one of the pre-configured dialog responses audibly, Mycroft’s response appears in text on the mirror followed by my utterance.

I am not quite sure why the events are being processed at different rates. If I had to guess, after a quick browse of as much of the code as I could, it might have something to do with the converse() portion of the code that handles the user utterances. It looks like there is a programmed delay before the utterance is passed to the adapt intent parser. That is just a guess and may not be the case at all.

The other peculiarity is that when I hacked /home/pi/mycroft-core/mycroft/client/speech/main.py and /home/pi/mycroft-core/mycroft/client/text/main.py, as I did in reply 56 above, it worked perfectly with no timing issues, other than being delayed a little from Mycroft’s actual audible response, as you can see in the video I posted. The user utterance appeared before Mycroft’s utterance every single time. So maybe it has something to do with the way my skill processes the messagebus notifications. @forslund, do you have any ideas?

In my case I am seeing the listening notification, then the response, but never the utterance. Not sure why at this point.

@pcwii Did you copy the code? Or type it? The reason I ask is it needs to be

utterance = message.data.get('utterances')

utterances with an ‘s’ at the end. It doesn’t work without the ‘s’. I typed it wrong the first time myself.

@pcwii The only reason I can think of at this point is your if statement. If it is not triggered, obviously it would not send the notification. Try it without the if statement to see if it works. Then you’ll know.

utterance = message.data.get('utterances') <---- shouldn’t this be utterance (without s)?

I saw what @dmwilsonkc wrote but in a skill of mine it looks like this:

response = message.data.get("utterance")

And it seems to work just fine

Based on the examples, I understood that…

message.data.get('utterance') is for what Mycroft speaks to me, and

message.data.get('utterances') is for what I speak to Mycroft.

At this point I am receiving what Mycroft speaks, but not what I am speaking.

@Luke Yes, when using the:

self.add_event('recognizer_loop:utterance', self.handle_utterance)

the correct code is:

    def handle_utterance(self, message):
        utterance = message.data.get('utterances')

with the ‘s’.

The documentation is here:

Normally in a skill you would use your code example, but when using the self.add_event, that particular event uses ‘utterances’ with an s.

At least that’s what I had to do to get it to work for me.
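To make the singular/plural difference concrete, here is a small sketch of how the two payloads can be read. The helper name is made up for this example. It assumes, as the earlier snippet in this thread implies, that `utterances` holds a list of strings while `utterance` holds a single string.

```python
def text_from_message(msg_type, data):
    """Pull the displayable text out of a messagebus payload, or None."""
    if msg_type == "recognizer_loop:utterance":
        # What the user said: a list under the plural key 'utterances'.
        utterances = data.get("utterances") or []
        return utterances[0] if utterances else None
    if msg_type == "speak":
        # What Mycroft says: a single string under the singular key 'utterance'.
        return data.get("utterance")
    return None
```

Looking up `'utterance'` in a `recognizer_loop:utterance` payload simply returns `None`, which would explain a handler that runs but sends nothing useful.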

my bad misunderstanding on my side, sorry

No problem, it really stymied me for a while. I couldn’t figure out what the problem was until I read the docs.

Hi all, Just wanted to give you an update on the magic-mirror-voice-control-skill. I’m just about done making little additions. I’ve changed the code slightly to try and adjust the text of user and mycroft utterances that appear on the mirror. In my above post, I had mentioned that the user utterances occasionally appear after mycroft’s response to those utterances. I tried to use a different messagebus notification to send mycroft’s utterance a little later. While it did not completely solve the problem, it did in many instances. Here’s the code change:

    def initialize(self):
        self.add_event('recognizer_loop:wakeword', self.handle_listen)
        self.add_event('recognizer_loop:utterance', self.handle_utterance)
        self.add_event('speak', self.handle_speak)
        self.add_event('recognizer_loop:audio_output_start', self.handle_output)

    def handle_listen(self, message):
        voice_payload = {"notification": "KALLIOPE", "payload": "Listening"}
        r = requests.post(url=self.voiceurl, data=voice_payload)

    def handle_utterance(self, message):
        utterance = message.data.get('utterances')
        voice_payload = {"notification": "KALLIOPE", "payload": utterance}
        r = requests.post(url=self.voiceurl, data=voice_payload)

    def handle_speak(self, message):
        # Only remember what Mycroft is about to say; it is sent later
        self.mycroft_utterance = message.data.get('utterance')

    def handle_output(self, message):
        # Audio output has started, so send Mycroft's text to the mirror now
        voice_payload = {"notification": "KALLIOPE", "payload": self.mycroft_utterance}
        r = requests.post(url=self.voiceurl, data=voice_payload)

If anyone has any ideas on how I could speed up the user utterances just a bit, that would completely fix the issue.
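One possible workaround, offered as an untested sketch and not as something from the skill: instead of speeding up the user utterance, hold Mycroft’s reply back until the user utterance has been shown. The class and method names are hypothetical, and `send` stands in for whatever posts text to the mirror.

```python
class TurnBuffer:
    """Orders display messages so the user's utterance precedes the reply."""

    def __init__(self, send):
        self.send = send            # callable that delivers text to the mirror
        self.awaiting_user = False  # True between wake word and user utterance
        self.held = []              # replies that arrived before the utterance

    def on_wakeword(self):
        # A new turn starts: drop stale replies and show the indicator.
        self.awaiting_user = True
        self.held.clear()
        self.send("Listening")

    def on_user_utterance(self, text):
        # Show what the user said first, then release any held replies.
        self.send(text)
        self.awaiting_user = False
        for reply in self.held:
            self.send(reply)
        self.held.clear()

    def on_reply(self, text):
        # Hold the reply if the user's utterance has not been shown yet.
        if self.awaiting_user:
            self.held.append(text)
        else:
            self.send(text)
```

The three methods would be called from the wake word, utterance and speak handlers respectively. One caveat: if the utterance event never arrives after a wake word, held replies are not shown until the next turn discards them, so a timeout may be needed.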

Cheers!

Hey everybody! So… At this point I have put the finishing touches on the magic-mirror-voice-control-skill. The next step will be to post to skill testing and feedback and create a video of its latest abilities. While I have not completely solved the “user utterances being displayed after Mycroft’s response” thing, I was able to figure out how to change the length of time the utterances are displayed on the mirror based on how long they are. So for instance, if Mycroft has a lot to say, like listing all the modules installed on the mirror, that text stays on the screen until Mycroft stops speaking. Using the 'recognizer_loop:audio_output_end' messagebus notification, I send a “REMOVE_MESSAGE” notification to the kalliope module. I then added a bit of code to the kalliope module to process the notification, like so:

    if (notification == "REMOVE_MESSAGE") {
        // When Mycroft signals the AUDIO_OUTPUT_END remove the message from the screen
        this.messages.splice(0, this.messages.length);
    }

That way the text will stay on the screen till Mycroft is done speaking instead of some arbitrary time limit.
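On the skill side, the matching handler might look something like the sketch below. This is a guess at the shape, not the skill’s actual code: the class name, the handler name and the empty payload are assumptions, and `post` is injected so the example runs without a mirror (in the skill it would be `requests.post`).

```python
def build_remove_message():
    """Notification telling the kalliope module to clear its text."""
    # The payload content is an assumption; the module only checks the name.
    return {"notification": "REMOVE_MESSAGE", "payload": ""}


class MirrorNotifier:
    """Posts notifications to the mirror through an injected post callable."""

    def __init__(self, url, post):
        self.url = url
        self.post = post  # e.g. requests.post in the real skill

    def handle_output_end(self, message=None):
        # Mycroft has finished speaking, so ask the mirror to clear the text.
        self.post(url=self.url, data=build_remove_message())
```

In the skill, the handler would be registered in `initialize` with `self.add_event('recognizer_loop:audio_output_end', notifier.handle_output_end)`.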

As I mentioned, I will post in Skill Feedback and hopefully we can have someone duplicate my results.

Cheers!

It has come to my attention that I should include a link to the testing and feedback page for this skill. You can find it here along with some instructions.

Testing and Feedback for Mycroft-MagicMirror Voice Control Skill

Cheers!