Thanks for the quick response. I managed to get the socket working, but I think a piece is still missing for a good user experience. Here is the case:

We use a Mycroft skill to connect to a Rasa chatbot via socketio. So far we have used Rasa's REST API, but the code became quite complex, and I thought real-time message exchange would be more reliable. Our conversations (within the same session) can run to 20+ turns. Our setup is a customized Mycroft App (websocket) + a customized Mycroft core + our own Mycroft skill + one Rasa chatbot with the socketio endpoint.

Our skill has an intent handler for the first user utterance, the one that activates the skill. The handler:

- connects the user to the socket endpoint,

- uses get_response to a) say “I’m listening” and b) wait for the user’s response,

- “calls” the socket, sending the second user message (the one after activation).

The intent handler also defines how to respond to incoming socket events: it speaks the Rasa bot's response.

The skill also has a converse method to handle all utterances after the first two (the activation utterance and the first question). It does not use get_response; instead, it calls the socket endpoint with the user's utterance. The converse method also contains the code that responds to incoming events, i.e. it speaks the Rasa bot's response.
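The routing this setup depends on can be modeled roughly as follows. This is a simplified plain-Python model, not mycroft-core code: as I understand it, active skills are offered each utterance through converse first (most recently used first), and only if none of them returns True does normal intent matching run. `ToySkill`, `dispatch` and the keyword matching are hypothetical stand-ins.

```python
from typing import Callable, List, Optional


class ToySkill:
    """Hypothetical stand-in for a skill: a name, a converse hook and intent keywords."""

    def __init__(self, name: str, converse: Callable[[str], bool], keywords: List[str]) -> None:
        self.name = name
        self.converse = converse
        self.keywords = keywords


def dispatch(utterance: str, active: List[ToySkill], all_skills: List[ToySkill]) -> Optional[str]:
    """Return the name of the skill that ends up handling the utterance, or None."""
    # 1. Active skills (most recently used first) may consume the utterance via converse.
    for skill in active:
        if skill.converse(utterance):
            return skill.name
    # 2. Otherwise fall back to (very naive) keyword-based intent matching.
    for skill in all_skills:
        if any(k in utterance.lower() for k in skill.keywords):
            return skill.name
    return None


# A Rasa-forwarding skill whose converse consumes everything, next to a timer skill.
rasa = ToySkill("rasa", converse=lambda u: True, keywords=["ask rasa"])
timer = ToySkill("timer", converse=lambda u: False, keywords=["timer"])

print(dispatch("set a timer for 5 minutes", active=[rasa], all_skills=[timer, rasa]))  # rasa
print(dispatch("set a timer for 5 minutes", active=[], all_skills=[timer, rasa]))      # timer
```

As long as the Rasa skill is active and its converse returns True for everything, the timer request never reaches intent matching.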

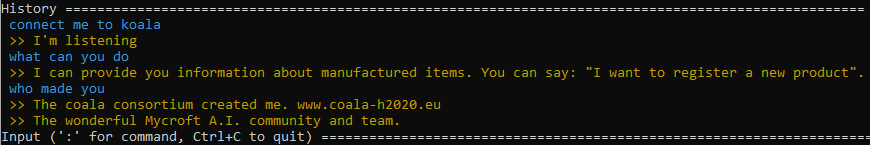

I can have conversations with the bot like the one in the attached image. There is a bug in my code, though: a message is not properly consumed (see the last turn, where Mycroft answers my question twice).

The big problem is that the converse method of our skill must handle any kind of user utterance, because users could direct any question to the Rasa chatbot. The only way I see to get out of an infinite converse mode is to deactivate the skill when the user says a stop word, but so far I have not managed to make the skill inactive.

A timeout is one option too, but it cannot be the only way. In our use case, users may need several minutes to continue with the same conversation: they follow instructions given by the assistant and report back when they are done. Without the converse method, users always need to re-activate the skill manually.
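Combining both exits (a stop phrase and a generous inactivity timeout) could look like the sketch below. `RasaSession`, the stop phrases and the 600-second window are hypothetical illustrations, not Mycroft APIs. The only real Mycroft convention relied on is that converse returning True means the utterance was consumed, while False lets Mycroft continue with normal intent handling.

```python
import time
from typing import Callable, Iterable


class RasaSession:
    """Hypothetical helper: decides whether converse should forward an utterance to Rasa."""

    def __init__(self, stop_phrases: Iterable[str], idle_timeout_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.stop_phrases = {p.strip().lower() for p in stop_phrases}
        self.idle_timeout_s = idle_timeout_s
        self.clock = clock          # injectable so the timeout can be tested
        self.active = False
        self.last_turn = 0.0

    def start(self) -> None:
        """Call from the intent handler once the skill has been activated."""
        self.active = True
        self.last_turn = self.clock()

    def should_consume(self, utterance: str) -> bool:
        """True: forward to Rasa. False: release the utterance to normal intent matching."""
        if not self.active:
            return False
        now = self.clock()
        if now - self.last_turn > self.idle_timeout_s:
            self.active = False     # silent for too long: end the conversation
            return False
        if utterance.strip().lower() in self.stop_phrases:
            self.active = False     # explicit exit via a stop phrase
            return False
        self.last_turn = now        # every forwarded turn restarts the idle window
        return True


# Usage with a fake clock (seconds) instead of real time.
fake_now = [0.0]
session = RasaSession(["stop", "that's all"], idle_timeout_s=600, clock=lambda: fake_now[0])
session.start()
print(session.should_consume("what is the next step"))  # True
fake_now[0] += 300  # the user needs five minutes to follow the instructions
print(session.should_consume("done, what now"))         # True
print(session.should_consume("that's all"))             # False: session ended
```

Releasing the stop phrase (returning False) lets Mycroft's own stop handling see it; alternatively the skill could speak a goodbye and return True.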

Conceptually, we may well run into a problem that humans also have in group conversations: how does a participant know that they are being addressed? In this case, the other participants are skills. Some of them get simple commands (e.g. timer), while others need to do multiple or complex tasks that require lots of context information (longer conversations).


Maybe it is possible to weight all skills, i.e. a skill with command-and-control behavior (e.g. timer or send email) is ranked higher, while “conversational interface skills” are ranked lower. Higher-ranked skills always process messages before lower-ranked ones.
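The weighting idea can be sketched as a priority-ordered routing loop. `RankedSkill`, `route`, the numeric priorities and the `can_handle` predicates are all hypothetical; nothing like this exists in Mycroft as far as I know.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class RankedSkill:
    name: str
    priority: int                      # hypothetical: higher = offered the utterance first
    can_handle: Callable[[str], bool]


def route(utterance: str, skills: List[RankedSkill]) -> Optional[str]:
    """Offer the utterance to skills in descending priority; the first taker wins."""
    for skill in sorted(skills, key=lambda s: s.priority, reverse=True):
        if skill.can_handle(utterance):
            return skill.name
    return None


skills = [
    RankedSkill("rasa", priority=10, can_handle=lambda u: True),             # conversational: takes anything
    RankedSkill("timer", priority=100, can_handle=lambda u: "timer" in u),   # command and control
]
print(route("set a timer for ten minutes", skills))  # timer
print(route("I have finished step three", skills))   # rasa
```

A timer request meant for the Rasa bot can never reach it under this scheme, because the higher-ranked timer skill always takes it first.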

The downside is that my Rasa bot might have a timer skill too, which would never be activated this way. That means conversational interface skills should communicate to Mycroft (with metadata) what they can do. If there is a conflict, Mycroft should ask which skill you want to use.

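The metadata idea could work roughly like this sketch: each skill declares capability names, and the router asks the user when more than one skill claims the requested capability. The capability names, the `CAPABILITIES` table and the `resolve` function are hypothetical, and mapping an utterance to a capability name is assumed to have happened already.

```python
from typing import Dict, List, Set

# Hypothetical capability declarations each skill would publish as metadata.
CAPABILITIES: Dict[str, Set[str]] = {
    "timer-skill": {"set_timer", "cancel_timer"},
    "email-skill": {"send_email"},
    "rasa-skill": {"set_timer", "troubleshoot_device"},
}


def candidates(capability: str, caps: Dict[str, Set[str]]) -> List[str]:
    """All skills that declare the requested capability, sorted for stable output."""
    return sorted(name for name, declared in caps.items() if capability in declared)


def resolve(capability: str, caps: Dict[str, Set[str]]) -> str:
    found = candidates(capability, caps)
    if not found:
        return "Sorry, no skill can do that."
    if len(found) == 1:
        return f"Routing to {found[0]}."
    # Conflict: more than one skill claims the capability, so ask the user.
    return "Which skill should I use: " + " or ".join(found) + "?"


print(resolve("send_email", CAPABILITIES))  # Routing to email-skill.
print(resolve("set_timer", CAPABILITIES))   # Which skill should I use: rasa-skill or timer-skill?
```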

The fluent transition between skills is indeed a complex issue, especially if we want super smooth interactions.